NVIDIA DGX, at the forefront of AI innovation, is a powerhouse system designed to accelerate the development and deployment of cutting-edge AI applications. From research labs to enterprise data centers, DGX systems empower organizations to tackle complex challenges and unlock new possibilities with the power of deep learning.

These systems are not just powerful computers; they are meticulously engineered platforms built on NVIDIA’s expertise in GPUs, high-speed interconnects, and a comprehensive software ecosystem. DGX systems provide a complete solution for AI development, from training massive models to deploying real-time inference applications.

NVIDIA DGX Systems Overview

NVIDIA DGX systems are powerful computing platforms designed for accelerating AI and deep learning workloads. They are targeted towards organizations and researchers who require high-performance computing capabilities to tackle complex AI tasks, such as training large language models, developing autonomous vehicles, and analyzing massive datasets.

History of NVIDIA DGX Systems

NVIDIA DGX systems have evolved significantly since their inception. The first generation, NVIDIA DGX-1, launched in 2016 as a single-node system with eight Tesla P100 GPUs. Subsequent generations, such as DGX-2 and DGX A100, increased processing power, memory capacity, and connectivity, enabling more demanding AI workloads.

Key Features and Benefits of NVIDIA DGX Systems

NVIDIA DGX systems offer several key features and benefits that make them ideal for AI and deep learning applications:

- High-Performance Computing: NVIDIA DGX systems leverage NVIDIA’s latest GPUs, providing massive parallel processing capabilities for accelerating deep learning model training and inference.

- Scalability: DGX systems can be scaled horizontally to accommodate increasing computational demands, allowing for the training of larger and more complex models.

- Optimized Software Stack: NVIDIA provides a comprehensive software stack specifically designed for AI workloads, including CUDA, cuDNN, and TensorRT, which optimize performance and simplify development.

- Integrated Ecosystem: NVIDIA DGX systems are part of a broader ecosystem of AI tools and resources, including NVIDIA Clara, NVIDIA Omniverse, and NVIDIA Triton Inference Server, facilitating seamless integration and collaboration.

NVIDIA DGX System Models and Specifications

NVIDIA offers a range of DGX system models, each tailored to specific computational requirements. The following table outlines the key specifications of some popular DGX systems:

| Model | GPUs | GPU Memory | Interconnect | Peak AI Compute |
|---|---|---|---|---|
| DGX Station A100 | 4 x A100 | 40GB or 80GB per GPU | NVLink | 2.5 petaFLOPS |
| DGX A100 | 8 x A100 | 40GB HBM2 or 80GB HBM2e per GPU | NVLink, NVSwitch | 5 petaFLOPS |
| DGX-2 | 16 x V100 | 32GB HBM2 per GPU | NVLink, NVSwitch | 2 petaFLOPS |
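
As a small worked example of reading the table, the sketch below multiplies GPU count by per-GPU memory to get the total GPU memory of a system. It uses the 40GB DGX A100 configuration and the DGX-2 row; the dictionary layout and the function name `total_gpu_memory_gb` are illustrative assumptions, not part of any NVIDIA tool.

```python
# Aggregate GPU memory per system, using two rows from the table above.
# The data layout and function name are hypothetical, chosen for illustration.
SYSTEMS = {
    "DGX A100": {"gpus": 8, "gb_per_gpu": 40},   # 40GB configuration
    "DGX-2": {"gpus": 16, "gb_per_gpu": 32},
}


def total_gpu_memory_gb(spec):
    """Return total GPU memory in GB: GPU count times per-GPU capacity."""
    return spec["gpus"] * spec["gb_per_gpu"]


for name, spec in SYSTEMS.items():
    print(f"{name}: {total_gpu_memory_gb(spec)} GB total GPU memory")
```

Total GPU memory is a rough upper bound on how large a model and its working data can be before they must be split across systems.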

Hardware Components and Architecture

NVIDIA DGX systems are designed for demanding AI workloads and are packed with high-performance hardware components. These components are carefully selected and integrated to provide the best possible performance and efficiency.

The architecture of a DGX system is optimized for parallel processing, enabling it to handle complex AI models and massive datasets. This architecture allows for efficient data flow and communication between the different components, contributing to its exceptional performance.

GPU Architecture

In the DGX A100 generation, the core of the system is the NVIDIA A100 Tensor Core GPU, which provides immense computational power for AI tasks. The A100 is built on NVIDIA’s Ampere architecture and includes Tensor Cores and Multi-Instance GPU (MIG) technology, which lets a single GPU be partitioned into isolated instances. These features accelerate deep learning training and inference on massive datasets.

CPU Architecture

NVIDIA DGX systems also include powerful CPUs for general-purpose computing tasks, such as data pre-processing and model management. The CPUs handle tasks that are not GPU-accelerated, ensuring a balanced and efficient system.

Memory

The system boasts a large amount of high-bandwidth memory (HBM2e) directly connected to the GPUs. This high-speed memory allows for rapid data access, minimizing data transfer bottlenecks and enabling the GPUs to operate at peak performance.

Storage

NVIDIA DGX systems utilize a high-performance storage system for storing massive datasets and trained models. This storage system provides high throughput and low latency, ensuring fast access to data for training and inference tasks.

Networking

NVIDIA DGX systems are equipped with high-speed networking capabilities, enabling seamless communication between GPUs and other components. This ensures efficient data transfer and coordination between different parts of the system, contributing to the overall performance and scalability of the system.

NVLink and NVSwitch

NVIDIA’s proprietary technologies, NVLink and NVSwitch, play a crucial role in enhancing the performance and scalability of DGX systems. NVLink is a high-speed interconnect technology that enables GPUs to communicate with each other directly, bypassing the CPU. This direct communication significantly reduces latency and improves data transfer speeds between GPUs, leading to faster training and inference. NVSwitch is a high-bandwidth switch that allows multiple GPUs to be interconnected and communicate efficiently. This technology enables the scaling of DGX systems to handle even more complex AI models and larger datasets.
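
To make the value of direct GPU-to-GPU links concrete, here is a toy simulation of a ring all-reduce, a common collective pattern for summing gradients across GPUs. It is a generic textbook algorithm written in pure Python, not NVIDIA's implementation; the function name `ring_all_reduce` and the use of plain lists to stand in for GPU buffers are assumptions made for illustration.

```python
def ring_all_reduce(buffers):
    """Sum equal-length lists across n simulated GPUs arranged in a ring.

    Each simulated GPU only ever sends one chunk to its right-hand neighbour
    per step, which is the kind of peer-to-peer traffic a direct GPU
    interconnect is meant to carry.
    """
    n = len(buffers)
    length = len(buffers[0])
    assert length % n == 0, "sketch assumes length divisible by GPU count"
    chunk = length // n
    data = [list(b) for b in buffers]  # work on copies

    def span(c):
        return range(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: after n-1 steps GPU r holds the full sum
    # of chunk (r + 1) % n.
    for step in range(n - 1):
        sends = [((r - step) % n, None) for r in range(n)]
        sends = [(c, [data[r][i] for i in span(c)]) for r, (c, _) in enumerate(sends)]
        for r, (c, payload) in enumerate(sends):
            dst = (r + 1) % n
            for i, value in zip(span(c), payload):
                data[dst][i] += value

    # Phase 2, all-gather: circulate the finished chunks around the ring.
    for step in range(n - 1):
        sends = [((r + 1 - step) % n, None) for r in range(n)]
        sends = [(c, [data[r][i] for i in span(c)]) for r, (c, _) in enumerate(sends)]
        for r, (c, payload) in enumerate(sends):
            dst = (r + 1) % n
            for i, value in zip(span(c), payload):
                data[dst][i] = value

    return data


# Four simulated GPUs, each holding a different 8-element "gradient".
gpus = [[float(r + 1)] * 8 for r in range(4)]
reduced = ring_all_reduce(gpus)
print(reduced[0])
```

In this pattern each GPU sends 2(n-1)/n times the buffer size in total, so the per-GPU traffic stays nearly constant as GPUs are added, provided every link in the ring is fast.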

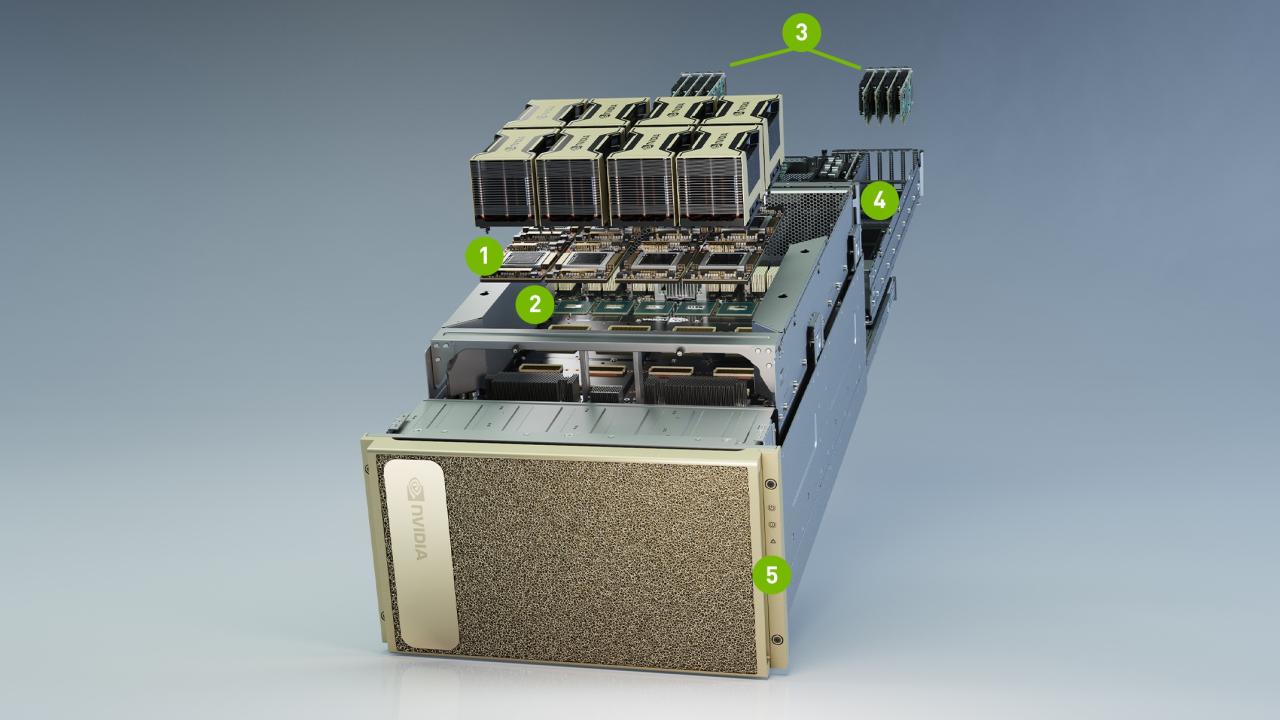

Diagram of an NVIDIA DGX System


Software and Ecosystem

NVIDIA DGX systems are not just powerful hardware; they are powered by a comprehensive software stack and a vibrant ecosystem that enables efficient AI development and deployment. This software suite includes operating systems, libraries, frameworks, and tools specifically designed to accelerate AI workflows.

NVIDIA Software Ecosystem

The NVIDIA software ecosystem is a collection of tools and libraries that are designed to work together seamlessly to accelerate AI workloads. It provides developers with a complete set of tools to build, train, and deploy AI models. Some key components of the NVIDIA software ecosystem include:

- CUDA: CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model created by NVIDIA. It allows software developers to use NVIDIA GPUs for general-purpose computing. CUDA provides a low-level interface to the GPU, enabling developers to write highly optimized code for parallel processing.

- cuDNN: cuDNN (CUDA Deep Neural Network) is a GPU-accelerated library of primitives for deep neural networks. It provides highly optimized implementations of common deep learning operations, such as convolution, pooling, and activation functions. cuDNN can significantly accelerate the training and inference of deep learning models.

- TensorRT: TensorRT is a high-performance inference optimizer and runtime that allows for deploying trained deep learning models on NVIDIA GPUs. It optimizes models for inference, reducing latency and increasing throughput. TensorRT supports a wide range of deep learning frameworks and can be used to deploy models on various platforms, including edge devices and cloud servers.

Benefits of NVIDIA Software

Using NVIDIA software for AI development and deployment offers several advantages:

- Performance Optimization: NVIDIA’s software is specifically designed to take advantage of the parallel processing capabilities of NVIDIA GPUs, resulting in significant performance improvements for AI workloads.

- Simplified Development: The NVIDIA software ecosystem provides a comprehensive set of tools and libraries that simplify the development of AI models. Developers can focus on building innovative models rather than dealing with low-level hardware details.

- Scalability and Flexibility: NVIDIA software can be deployed on a wide range of platforms, from single-GPU systems to large-scale clusters. This scalability and flexibility allow for the deployment of AI models in various environments, including cloud, edge, and on-premises deployments.

Popular AI Frameworks and Libraries

Several popular AI frameworks and libraries are optimized for NVIDIA DGX systems, enabling efficient development and deployment of AI models. These frameworks and libraries provide a rich set of tools and features for building, training, and deploying AI models, including:

- TensorFlow: TensorFlow is an open-source machine learning framework developed by Google. It provides a comprehensive set of tools and libraries for building and deploying machine learning models. TensorFlow is highly optimized for NVIDIA GPUs and is widely used for various AI applications, including image recognition, natural language processing, and time series analysis.

- PyTorch: PyTorch is an open-source machine learning framework originally developed by Facebook’s AI research group (now Meta AI). It builds its computation graph dynamically at run time, which makes models easier to debug and experiment with. PyTorch is also highly optimized for NVIDIA GPUs and is widely used for AI research and development.

- Keras: Keras is a high-level neural network API written in Python. It provides a user-friendly interface for building and training neural networks. Keras runs on top of a backend framework, most commonly TensorFlow (early versions also supported Theano), and relies on that backend for NVIDIA GPU acceleration.

- MXNet: MXNet is an open-source deep learning framework that was developed under the Apache Software Foundation as Apache MXNet. It provides a flexible and scalable platform for building and deploying AI models. MXNet is optimized for NVIDIA GPUs and has been used in applications including natural language processing, image recognition, and machine translation.
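
Before starting work on a system, it can help to confirm which of these frameworks are actually installed in the current Python environment. The sketch below uses only the standard library; the mapping `FRAMEWORKS` and the function name `installed_frameworks` are illustrative assumptions, while the import names (`tensorflow`, `torch`, `keras`, `mxnet`) are the packages' usual top-level module names.

```python
import importlib.util

# Display name -> top-level import name for the frameworks listed above.
FRAMEWORKS = {
    "TensorFlow": "tensorflow",
    "PyTorch": "torch",
    "Keras": "keras",
    "MXNet": "mxnet",
}


def installed_frameworks(candidates=FRAMEWORKS):
    """Map each display name to True if its module can be located.

    find_spec only looks the module up; it does not import it, so this
    check is cheap and has no GPU side effects.
    """
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in candidates.items()
    }


for name, present in installed_frameworks().items():
    print(f"{name}: {'installed' if present else 'not found'}")
```

This only reports whether a package is present; it says nothing about whether that build was compiled with GPU support.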

Use Cases and Applications

NVIDIA DGX systems are powerful computing platforms designed to accelerate the development and deployment of AI applications across various industries. These systems offer unparalleled performance and scalability, enabling organizations to tackle complex problems and drive innovation in their respective fields.

Healthcare

NVIDIA DGX systems are revolutionizing healthcare by enabling faster and more accurate diagnoses, personalized treatments, and drug discovery.

- Medical Imaging Analysis: DGX systems power AI algorithms for analyzing medical images, such as X-rays, CT scans, and MRIs, to detect abnormalities and assist in early diagnosis of diseases like cancer, heart disease, and neurological disorders.

- Drug Discovery: DGX systems accelerate the process of drug discovery by enabling researchers to simulate complex molecular interactions and predict the effectiveness of potential drug candidates.

- Precision Medicine: DGX systems enable personalized medicine by analyzing patient data to identify genetic predispositions and predict individual responses to specific treatments.

Finance

NVIDIA DGX systems are transforming the financial industry by enabling fraud detection, risk management, and algorithmic trading.

- Fraud Detection: DGX systems power AI algorithms that analyze financial transactions in real-time to identify fraudulent activities, such as credit card fraud and money laundering.

- Risk Management: DGX systems enable financial institutions to assess and manage risk by analyzing market data, customer behavior, and other relevant factors.

- Algorithmic Trading: DGX systems power high-frequency trading algorithms that execute trades at lightning speed, based on real-time market data and complex trading strategies.

Manufacturing

NVIDIA DGX systems are empowering manufacturers to optimize production processes, improve product quality, and enhance predictive maintenance.

- Predictive Maintenance: DGX systems analyze sensor data from machines and equipment to predict potential failures and schedule maintenance proactively, reducing downtime and increasing efficiency.

- Quality Control: DGX systems power AI algorithms for inspecting products for defects and ensuring quality standards are met.

- Process Optimization: DGX systems analyze production data to identify bottlenecks and optimize processes, leading to increased productivity and reduced costs.

Research

NVIDIA DGX systems are accelerating scientific discovery in various fields, including climate modeling, materials science, and astrophysics.

- Climate Modeling: DGX systems enable researchers to develop more accurate and detailed climate models, helping to understand and predict climate change.

- Materials Science: DGX systems accelerate the discovery of new materials with specific properties by simulating complex molecular interactions.

- Astrophysics: DGX systems power AI algorithms for analyzing astronomical data, leading to new discoveries about the universe.

| Use Case | Applications |
|---|---|
| Healthcare | Medical imaging analysis, drug discovery, precision medicine |
| Finance | Fraud detection, risk management, algorithmic trading |
| Manufacturing | Predictive maintenance, quality control, process optimization |
| Research | Climate modeling, materials science, astrophysics |

Performance and Scalability

NVIDIA DGX systems are designed to deliver exceptional performance and scalability for demanding AI workloads. They achieve this through a combination of powerful hardware components, optimized software, and a flexible architecture that allows for both horizontal and vertical scaling.

Compute Power

NVIDIA DGX systems are equipped with multiple NVIDIA Tensor Core GPUs (eight A100 GPUs in a DGX A100), providing massive parallel processing capabilities. These GPUs offer a significant performance advantage for AI tasks, particularly deep learning, because their specialized Tensor Cores accelerate matrix multiplication, a fundamental operation in deep learning algorithms. The GPU count is fixed for each DGX model, so capacity is matched to a project by choosing an appropriate model or by clustering several systems.
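
To show why matrix multiplication dominates the compute budget, the sketch below counts floating-point operations for a dense matrix product using the conventional 2·m·k·n estimate (one multiply and one add per term) and divides by a peak throughput. The peak value is the 5 petaFLOPS DGX A100 figure from the specifications table; the function names are hypothetical, and real workloads reach only a fraction of peak.

```python
def matmul_flops(m, k, n):
    """Conventional FLOP count for an (m x k) @ (k x n) dense product."""
    return 2 * m * k * n


def ideal_seconds(flops, peak_flops_per_second):
    """Lower bound on run time if the hardware ran at peak throughput."""
    return flops / peak_flops_per_second


PEAK_FLOPS = 5e15  # 5 petaFLOPS; assumed peak, not a measured rate

flops = matmul_flops(8192, 8192, 8192)
print(f"{flops:,} FLOPs")
print(f"ideal time at peak: {ideal_seconds(flops, PEAK_FLOPS) * 1e3:.3f} ms")
```

Because the operation count grows with the product of all three dimensions, doubling every dimension of a layer multiplies the work by eight, which is why hardware specialized for this one operation pays off.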

Memory Bandwidth

To handle the massive datasets used in AI training and inference, NVIDIA DGX systems feature high-bandwidth memory (HBM) and NVLink interconnects. HBM provides fast access to data, reducing the time spent fetching data from memory, while NVLink allows GPUs to communicate directly with each other, enabling efficient data sharing and reducing bottlenecks.

Storage Throughput

NVIDIA DGX systems incorporate high-performance storage solutions, such as NVMe SSDs and high-speed network interconnects, to ensure fast data access and efficient data transfer between the GPUs and storage. This enables rapid training and inference, as well as efficient data handling for large-scale AI projects.

Scalability

NVIDIA DGX systems can be scaled both horizontally and vertically to meet growing computational demands. Horizontal scaling involves adding more DGX systems to a cluster, increasing total processing power and parallelism. Vertical scaling, on the other hand, means increasing the capability of a single node. Because the GPU count within a given DGX model is fixed, this typically means moving to a model with more or faster GPUs.
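
One common way to use a horizontally scaled cluster is data-parallel training, where every GPU processes a different slice of the dataset. The sketch below shows the bookkeeping under simple assumptions: each GPU gets a global rank derived from its node and local index, and samples are dealt out round-robin. The function names and the cluster shape (2 nodes with 8 GPUs each) are illustrative, not taken from any NVIDIA tool.

```python
def global_rank(node, local_gpu, gpus_per_node):
    """Unique index of a GPU across the whole cluster."""
    return node * gpus_per_node + local_gpu


def shard_indices(num_samples, rank, world_size):
    """Sample indices assigned to one GPU, dealt out round-robin."""
    return list(range(rank, num_samples, world_size))


NODES = 2           # assumed cluster size for this example
GPUS_PER_NODE = 8   # e.g. an eight-GPU system
WORLD_SIZE = NODES * GPUS_PER_NODE

rank = global_rank(node=1, local_gpu=3, gpus_per_node=GPUS_PER_NODE)
print(f"rank {rank} of {WORLD_SIZE} handles {shard_indices(100, rank, WORLD_SIZE)}")
```

Adding a node changes only `NODES`: every GPU's shard shrinks, so each training pass over the data takes fewer steps per GPU, at the cost of more gradient traffic between systems.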

Benchmark Results

NVIDIA DGX systems have performed strongly in industry benchmarks. For example, in MLPerf, a standard benchmark suite for measuring AI performance, NVIDIA has reported leading results for DGX-based submissions on tasks including image classification, object detection, and natural language processing.

Real-World Applications

NVIDIA DGX systems are widely used across industries to accelerate AI development and deployment. For example, in healthcare, DGX systems are used to train deep learning models for medical image analysis, drug discovery, and personalized medicine. In finance, they are used for fraud detection, risk assessment, and algorithmic trading. In automotive, they are used for autonomous vehicle development and traffic management.

Deployment and Management

Deploying and managing NVIDIA DGX systems involves a comprehensive process encompassing installation, configuration, and ongoing maintenance. The approach to deployment can vary based on specific needs and infrastructure, with options including on-premises, cloud-based, and hybrid environments. This section delves into the details of deploying and managing NVIDIA DGX systems, outlining best practices for optimal performance and reliability.

Deployment Options

NVIDIA DGX systems offer flexibility in deployment, catering to diverse infrastructure preferences.

- On-premises deployment involves installing and managing the DGX system within your own data center. This provides complete control over the hardware and software, offering high levels of security and customization. However, it also necessitates significant upfront investment and ongoing maintenance responsibilities.

- Cloud-based deployment leverages cloud service providers like AWS, Azure, or GCP to host the DGX system. This approach eliminates the need for on-premises infrastructure, offering scalability and pay-as-you-go pricing. However, it may introduce latency concerns and limitations in customization compared to on-premises deployments.

- Hybrid deployment combines the advantages of both on-premises and cloud-based approaches. This option allows you to deploy specific components of the DGX system on-premises while leveraging cloud services for other aspects, such as storage or compute resources. This approach offers a balanced solution, allowing for greater flexibility and control while benefiting from the scalability of cloud services.

Deployment and Management Process

Deploying and managing an NVIDIA DGX system involves a structured process that ensures optimal performance and reliability. The following steps outline the key stages involved:

- Planning and Design: This initial phase involves defining your specific needs and objectives for the DGX system. Consider factors such as the type of workloads, required compute power, storage capacity, and desired level of security. Based on these factors, you can choose the appropriate DGX system configuration and deployment option.

- Hardware Installation: Once the DGX system arrives, it needs to be physically installed in your chosen environment. This involves connecting the system to power, networking, and storage devices. Ensure proper ventilation and cooling to maintain optimal system performance.

- Software Installation and Configuration: The next step involves installing and configuring the necessary software on the DGX system. This includes the operating system, NVIDIA drivers, and software frameworks such as CUDA, cuDNN, and TensorRT. The configuration process involves customizing settings to optimize performance for your specific workloads.

- Performance Optimization: After initial deployment, it’s crucial to optimize the DGX system for peak performance. This involves fine-tuning parameters, such as memory allocation, CPU and GPU utilization, and network bandwidth. Utilize tools like NVIDIA’s performance analysis tools to identify and address potential bottlenecks.

- Monitoring and Maintenance: Ongoing monitoring is essential to ensure the DGX system operates smoothly. Regularly track key metrics like CPU and GPU utilization, memory usage, network throughput, and system temperature. Implement proactive maintenance practices, such as software updates, security patches, and hardware checks, to prevent issues and maintain optimal performance.
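
The monitoring step above can be sketched with `nvidia-smi`, the command-line tool that ships with the NVIDIA driver. The example queries per-GPU utilization, memory, and temperature in CSV form and parses the result. The helper names (`parse_gpu_csv`, `query_gpus`), the 40 °C threshold, and the `SAMPLE` text are assumptions for illustration; the sample is made-up output in the expected shape, not a real measurement.

```python
import shutil
import subprocess

QUERY_FIELDS = [
    "index",
    "utilization.gpu",
    "memory.used",
    "memory.total",
    "temperature.gpu",
]


def _to_int(value):
    """Convert a CSV cell to int, or None for values such as '[N/A]'."""
    try:
        return int(value)
    except ValueError:
        return None


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into a list of dicts."""
    rows = []
    for line in text.strip().splitlines():
        cells = [c.strip() for c in line.split(",")]
        if len(cells) != len(QUERY_FIELDS):
            continue  # skip lines that do not match the expected shape
        rows.append(dict(zip(QUERY_FIELDS, (_to_int(c) for c in cells))))
    return rows


def query_gpus():
    """Return per-GPU metrics, or [] if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
    )
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []


# Made-up sample in the shape nvidia-smi produces (memory in MiB, temp in C).
SAMPLE = "0, 37, 1024, 40960, 41\n1, 0, 3, 40960, 35\n"
gpus = parse_gpu_csv(SAMPLE)
warm = [g["index"] for g in gpus
        if g["temperature.gpu"] is not None and g["temperature.gpu"] >= 40]
print("GPUs at or above 40 C:", warm)
```

On a real system, calling `query_gpus()` on a schedule and logging the result gives a minimal baseline for the utilization, memory, and temperature tracking described above.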

Best Practices for Management

Maintaining optimal performance and reliability of your NVIDIA DGX system requires adhering to best practices:

- Regular Software Updates: Keep the DGX system’s operating system, NVIDIA drivers, and software frameworks up-to-date. Updates often include performance enhancements, security patches, and bug fixes.

- Monitoring and Logging: Utilize monitoring tools to track key performance indicators and system health. Implement logging mechanisms to capture system events and troubleshoot potential issues.

- Backups and Disaster Recovery: Regularly back up your data and configurations to prevent data loss in case of system failures. Develop a disaster recovery plan to ensure rapid system restoration in the event of unforeseen incidents.

- Security Best Practices: Implement robust security measures, such as strong passwords, access control policies, and network firewalls, to protect your DGX system from unauthorized access and cyber threats.

Security and Reliability

NVIDIA DGX systems are designed with robust security features and reliability mechanisms to ensure the protection of sensitive data and the smooth operation of demanding AI workloads. These systems are built to withstand failures and maintain continuous operation, crucial for mission-critical applications.

Security Features

NVIDIA DGX systems incorporate a comprehensive set of security features to safeguard data and applications. These features include:

- Secure Boot: This feature ensures that only trusted operating systems and applications are loaded on the system, preventing unauthorized software from gaining access.

- Hardware-based Security: NVIDIA DGX systems leverage hardware-based security mechanisms, such as Trusted Platform Module (TPM) and Secure Encrypted Virtualization (SEV), to protect sensitive data and prevent unauthorized access.

- Secure Networking: The systems support secure network protocols like TLS/SSL, ensuring data confidentiality and integrity during transmission.

- Access Control: NVIDIA DGX systems provide granular access control mechanisms to restrict access to sensitive data and applications based on user roles and permissions.

- Data Encryption: NVIDIA DGX systems support data encryption at rest and in transit, protecting sensitive information from unauthorized access.

- Security Monitoring and Auditing: NVIDIA DGX systems offer comprehensive security monitoring and auditing capabilities, allowing administrators to track system activity and detect potential security threats.
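
As a small illustration of the secure networking point, the sketch below builds a TLS client configuration with Python's standard `ssl` module, as a script running on any Linux host might do when calling a remote service. It is generic Python rather than anything DGX-specific, and the function name `make_client_context` and the choice of TLS 1.2 as the minimum version are assumptions.

```python
import ssl


def make_client_context():
    """Create a TLS client context with certificate checks enabled."""
    # create_default_context() enables certificate verification and
    # hostname checking for client connections by default.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (an assumed policy).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_context()
print("verifies certificates:", context.verify_mode == ssl.CERT_REQUIRED)
print("checks hostnames:", context.check_hostname)
```

The resulting context can be passed to standard-library clients such as `urllib.request.urlopen(url, context=context)`.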

Reliability Mechanisms

NVIDIA DGX systems employ various reliability mechanisms to ensure high availability and minimize downtime. These mechanisms include:

- Redundant Power Supplies: NVIDIA DGX systems are equipped with redundant power supplies, ensuring uninterrupted operation even in case of power supply failures.

- Redundant Network Connections: The systems support multiple network connections, providing failover capabilities in case of network outages.

- Fault-Tolerant Storage: NVIDIA DGX systems use fault-tolerant storage solutions, such as RAID configurations, to protect data from disk failures.

- System Monitoring and Management: NVIDIA DGX systems provide comprehensive monitoring and management tools, allowing administrators to proactively identify and address potential issues before they impact system performance.

- Software Updates and Patches: NVIDIA regularly releases software updates and security patches to address vulnerabilities and improve system security and reliability.

Security-Sensitive Applications

NVIDIA DGX systems are deployed in various security-sensitive applications and environments, including:

- Healthcare: In healthcare, NVIDIA DGX systems are used to process sensitive patient data for tasks like medical imaging analysis, drug discovery, and personalized medicine.

- Financial Services: NVIDIA DGX systems are employed in financial institutions for fraud detection, risk assessment, and algorithmic trading, requiring high levels of security and reliability.

- Government and Defense: NVIDIA DGX systems are used by government agencies and defense organizations for tasks like intelligence analysis, cybersecurity, and national security.

Security and Reliability Features

| Feature | Description |
|---|---|
| Secure Boot | Ensures only trusted software is loaded, preventing unauthorized access. |
| Hardware-based Security | Leverages TPM and SEV to protect sensitive data and prevent unauthorized access. |
| Secure Networking | Supports secure network protocols like TLS/SSL for data confidentiality and integrity. |
| Access Control | Provides granular access control mechanisms based on user roles and permissions. |
| Data Encryption | Supports data encryption at rest and in transit to protect sensitive information. |
| Security Monitoring and Auditing | Offers comprehensive security monitoring and auditing capabilities for threat detection. |
| Redundant Power Supplies | Ensures uninterrupted operation even in case of power supply failures. |
| Redundant Network Connections | Provides failover capabilities in case of network outages. |
| Fault-Tolerant Storage | Protects data from disk failures using RAID configurations. |
| System Monitoring and Management | Allows administrators to proactively identify and address potential issues. |
| Software Updates and Patches | Regularly released to address vulnerabilities and improve security and reliability. |

Future Trends and Innovations

The landscape of AI and high-performance computing is constantly evolving, driven by breakthroughs in hardware, software, and algorithms. NVIDIA DGX systems are at the forefront of this evolution, playing a crucial role in accelerating scientific discovery, driving innovation, and shaping the future of AI.

Emerging Trends and Advancements

The field of AI is experiencing rapid advancements, fueled by the convergence of several key trends:

- Generative AI: The rise of generative AI models like large language models (LLMs) and diffusion models is transforming how we interact with technology. These models are capable of creating realistic and creative content, including text, images, audio, and video. NVIDIA DGX systems are essential for training and deploying these complex models.

- Multimodal AI: The ability to process and understand multiple forms of data, such as text, images, and audio, is becoming increasingly important. Multimodal AI models are capable of performing tasks that require a deeper understanding of the world, such as image captioning, speech recognition, and machine translation.

- Edge AI: As AI applications become more pervasive, the need for edge computing solutions is growing. Models trained on NVIDIA DGX systems are increasingly deployed to edge devices for real-time inference and decision-making in industries from healthcare to manufacturing.

- Quantum Computing: Quantum computing holds the potential to revolutionize AI and scientific research. NVIDIA is actively exploring the integration of quantum computing with its DGX systems to unlock new possibilities in areas like drug discovery and materials science.

Potential Future Applications and Use Cases

The capabilities of NVIDIA DGX systems are constantly expanding, opening up new possibilities across various domains:

- Personalized Medicine: DGX systems can be used to analyze vast amounts of medical data, enabling personalized treatment plans, early disease detection, and drug discovery.

- Autonomous Vehicles: DGX systems are crucial for training and deploying the AI models that power self-driving cars, enabling them to navigate complex environments safely and efficiently.

- Climate Change Research: DGX systems can be used to model climate patterns, analyze environmental data, and develop solutions to mitigate the effects of climate change.

- Financial Modeling: DGX systems can be used to analyze market trends, predict financial risks, and optimize investment strategies.

NVIDIA’s Roadmap for Future DGX System Development and Innovations

NVIDIA is committed to pushing the boundaries of AI and high-performance computing. The company’s roadmap for future DGX system development includes:

- Next-Generation GPUs: NVIDIA continues to innovate with its GPU architecture, delivering significant performance gains and efficiency improvements with each new generation.

- Advanced Interconnect Technologies: NVIDIA is investing in high-speed interconnects, such as NVLink and NVSwitch, to enable seamless communication between GPUs and other components within DGX systems.

- Software Optimization: NVIDIA is continuously optimizing its software stack, including CUDA, cuDNN, and TensorRT, to ensure maximum performance and efficiency for AI workloads.

- Ecosystem Expansion: NVIDIA is expanding its ecosystem of partners and developers, providing access to a wider range of tools, libraries, and frameworks for AI development.

Key Future Trends and Innovations Related to NVIDIA DGX Systems

- AI-Powered Supercomputing: DGX systems are becoming increasingly powerful, enabling the development of AI models that require massive computational resources.

- Edge-to-Cloud AI: NVIDIA is developing solutions that seamlessly connect edge devices with cloud-based AI services, enabling distributed AI applications.

- AI for Sustainability: DGX systems are being used to develop AI solutions that address environmental challenges, such as renewable energy optimization and climate modeling.

- AI for Social Good: DGX systems are being used to develop AI solutions that address societal issues, such as healthcare, education, and poverty reduction.

Epilogue

NVIDIA DGX systems are revolutionizing the way we approach AI, offering a powerful and scalable platform to fuel innovation across industries. Whether you’re a researcher pushing the boundaries of scientific discovery or an enterprise seeking to leverage AI for competitive advantage, DGX systems provide the tools and performance necessary to achieve your goals.
